Kubernetes

What is Kubernetes

Kubernetes (also known as k8s or “kube”) is an open source container orchestration platform that automates many of the manual processes involved in deploying, managing, and scaling containerized applications

-

The origin, functions, and benefits of Kubernetes.

-

Basics of modern application development and container management and orchestration.

-

A look at basic Kubernetes architecture.

-

Factors for you to consider when adopting Kubernetes.

-

Information about how Red Hat OpenShift can help you simplify and scale Kubernetes applications.

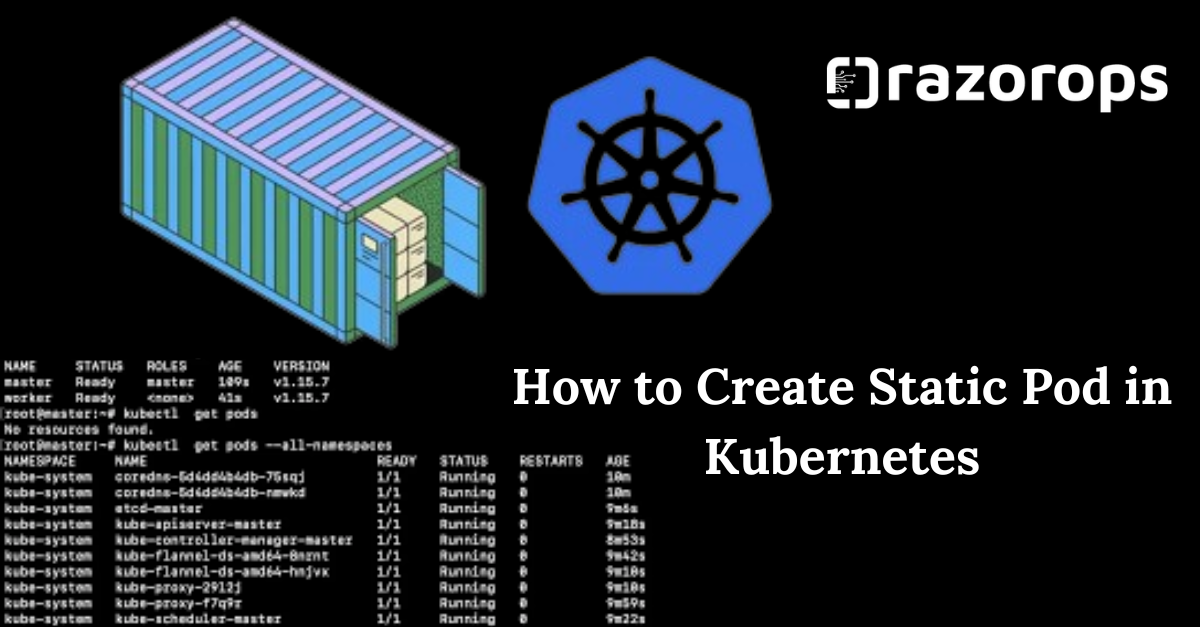

What is a Kubernetes cluster

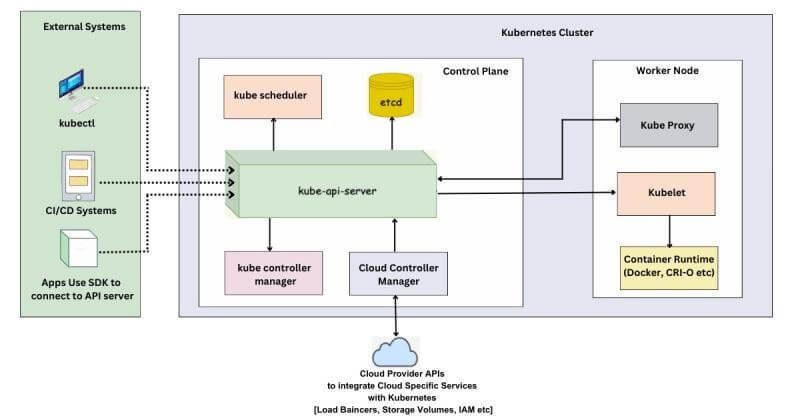

A working Kubernetes deployment is called a cluster, which is a group of hosts running Linux containers. You can visualize a Kubernetes cluster as two parts: the control plane and the compute machines, or nodes.

Each node is its own Linux environment, and could be either a physical or virtual machine. Each node runs pods, which are made up of containers.

The control plane is responsible for maintaining the desired state of the cluster, such as which applications are running and which container images they use. Compute machines actually run the applications and workloads. The control plane takes commands from an administrator (or DevOps team) and relays those instructions to the compute machines.

This handoff works with a multitude of services to automatically decide which node is best suited for the task. Services decouple work definitions from the pods and automatically get service requests to the right pod—no matter where it moves in the cluster or even if it’s been replaced. It allocates resources and assigns the pods in that node to fulfil the requested work.

Kubernetes runs on top of an operating system Red Hat Enterprise Linux, and interacts with pods of containers running on the nodes.

The desired state of a Kubernetes cluster defines which applications or other workloads should be running, along with which images they use, which resources should be made available to them, and other such configuration details.

There is little change to how you manage containers using this type of infrastructure. Your involvement just happens at a higher level, giving you better control without the need to micromanage each separate container or node.

Where you run Kubernetes is up to you. This can be on bare metal servers, virtual machines (VMs), public cloud providers, private clouds, and hybrid cloud environments. One of Kubernetes’ key advantages is it works on many different kinds of infrastructure.

$1000+ Free Cloud Credits to Deploy Clusters

Deploying big clusters on the cloud could be expensive. So make use of the following cloud credits and learn to launch clusters as if you would on a real-time project. All platforms offer managed k8s services.

GKE (Google Cloud – $300 free credits)

EKS (AWS – $300 free POC credits)

DO Kubernetes (Digital Ocean – $200 free credits)

Linode Kubernetes Engine (Linode Cloud – $100 Free credits)

Vultr Kubernetes Engine (Vultr Cloud – $250 Free Credits)

What are Kubernetes labels

Kubernetes labels are key-value string pairs that link identifying metadata to Kubernetes objects. Kubernetes provides teams with integrated support for using labels to retrieve and filter the data from the Kubernetes API and carry out bulk operations on the selected objects.

Many teams use Kubernetes labels to provide DevOps with information about the ownership of a node, a pod, or other Kubernetes objects for easier tracking and operational decision-making.

When creating a new label, you must comply with the restrictions Kubernetes places on the length and allowed values. A label value must:

-

Contain 63 characters or less (a label’s value can also be empty),

-

Start and end with an alphanumeric character (unless it’s empty),

-

Only include dashes (-), underscores (_), dots (.), and alphanumerics

What is Kubernetes used for?

Kubernetes can help you deliver and manage containerized, legacy, and cloud-native apps, as well as those being refactored into microservices.

In order to meet changing business needs, your development team needs to be able to rapidly build new applications and services. Cloud-native development starts with microservices in containers, which enables faster development and makes it easier to transform and optimize existing applications.

Application development with Kubernetes

Production apps span multiple containers, and those containers must be deployed across multiple server hosts. Kubernetes gives you the orchestration and management capabilities required to deploy containers, at scale, for these workloads.

Kubernetes orchestration allows you to build application services that span multiple containers, schedule those containers across a cluster, scale those containers, and manage the health of those containers over time. With Kubernetes you can take effective steps toward better IT security.

Kubernetes also needs to integrate with networking, storage, security, telemetry, and other services to provide a comprehensive container infrastructure.

Kubernetes explained - diagram

Once you scale this to a production environment and multiple applications, it’s clear that you need multiple, colocated containers working together to deliver the individual services.

Linux containers give your microservice-based apps an ideal application deployment unit and self-contained execution environment. And microservices in containers make it easier to orchestrate services, including storage, networking, and security.

This significantly multiplies the number of containers in your environment, and as those containers accumulate, the complexity also grows.

Kubernetes fixes a lot of common problems with container proliferation by sorting containers together into “pods.” Pods add a layer of abstraction to grouped containers, which helps you schedule workloads and provide necessary services—like networking and storage—to those containers.

Other parts of Kubernetes help you balance loads across these pods and ensure you have the right number of containers running to support your workloads.

With the right implementation of Kubernetes and with the help of other open source projects like Open vSwitch, OAuth, and SELinux you can orchestrate all parts of your container infrastructure.

Core Concepts:

Pods: Explain the fundamental unit in Kubernetes, comprising one or more containers.

Services: Detail how Kubernetes abstracts networking, exposing pods to the network.

ReplicaSets and Deployments: Discuss scaling and managing multiple instances of pods.

Labels and Selectors: Explain how Kubernetes manages and organizes resources using metadata.

Key Features:

Automatic Scaling: Discuss Kubernetes’ ability to scale applications based on resource usage.

Self-healing Capabilities: Explain how Kubernetes maintains the desired state of applications.

Rolling Updates and Rollbacks: Detail how Kubernetes enables seamless updates and reversions.

Architecture:

Master and Nodes: Describe the architecture, including the control plane (master) and worker nodes.

Kubelet, Kube-proxy, and etcd: Briefly explain the roles of these components in the Kubernetes ecosystem.

Use Cases:

Microservices Orchestration: Discuss how Kubernetes is ideal for managing microservices-based architectures.

Continuous Deployment: Explain how Kubernetes facilitates CI/CD pipelines for faster software delivery.

Hybrid and Multi-Cloud Deployments: Discuss Kubernetes’ flexibility in running applications across different cloud providers.

Best Practices:

Resource Management:

-

Resource Requests and Limits: Set resource requests and limits for pods to ensure efficient resource utilization and prevent resource starvation.

-

Horizontal Pod Autoscaling: Implement autoscaling based on resource metrics to handle varying workloads efficiently.

Security:

-

RBAC (Role-Based Access Control): Use RBAC to control access to Kubernetes resources and enforce the principle of least privilege.

-

Network Policies: Implement network policies to control traffic flow and enhance security within the cluster.

-

Secrets Management: Store sensitive information like API keys or passwords securely using Kubernetes Secrets.

High Availability and Reliability:

-

Pod Anti-Affinity: Set anti-affinity rules to ensure critical pods are distributed across different nodes for better availability.

-

Pod Disruption Budgets (PDB): Define PDBs to control disruptions during maintenance, ensuring a certain number of pods remain available.

Monitoring and Logging:

-

Prometheus and Grafana: Set up monitoring using tools like Prometheus for metrics collection and Grafana for visualization.

-

Centralized Logging: Use solutions like Elasticsearch, Fluentd, and Kibana (EFK stack) for centralized log collection and analysis.

Cluster Upgrades:

-

Regular Updates: Keep Kubernetes and its components updated to leverage new features, improvements, and security patches.

-

Testing in Staging: Perform upgrades in a staging environment to ensure compatibility before applying changes in production.

Backup and Disaster Recovery:

-

Regular Backups: Create backups of critical data and configurations to recover from potential disasters or data loss scenarios.

-

DR Strategies: Implement disaster recovery strategies to minimize downtime and ensure business continuity.

Optimizing Networking:

-

Container Network Interface (CNI): Choose the right CNI plugin suited for your networking requirements.

-

Service Meshes: Consider using service meshes like Istio or Linkerd for advanced traffic management and observability.

Documentation and Labeling:

-

Clear Documentation: Maintain detailed documentation of configurations, deployments, and operational procedures.

-

Consistent Labeling: Use consistent labels and annotations to organize and manage resources effectively.

CI/CD Integration:

-

Automated Deployment: Integrate Kubernetes with CI/CD pipelines for automated and streamlined deployments.

-

Testing Strategies: Implement robust testing strategies within the CI/CD pipeline to ensure reliability.

Education and Training:

-

Training and Skill Development: Continuously educate the team about Kubernetes updates, best practices, and new features.

-

Hands-on Practice: Encourage hands-on practice and experimentation to deepen understanding and proficiency.

-

Resource Management: Offer tips for efficient resource allocation and utilization.

-

Security: Discuss best practices for securing Kubernetes clusters.

-

Monitoring and Logging: Highlight the importance of monitoring tools and centralized logging for Kubernetes environments.

Getting Started with Kubernetes

Installation: Kubernetes can be installed on local machines for development purposes using tools like Minikube. For production environments, managed Kubernetes services from cloud providers like Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS), or Azure Kubernetes Service (AKS) offer robust solutions.

Learning Resources: Various online tutorials, documentation, and interactive courses are available to help beginners grasp Kubernetes concepts effectively.

Hands-On Experience: Experimenting with deploying sample applications and gradually scaling them on Kubernetes helps in gaining practical knowledge.

Kubernetes in Action

Deployment Strategies: Explore different deployment strategies like rolling updates, canary deployments, and blue-green deployments facilitated by Kubernetes.

Kubernetes strategies encompass a set of approaches and techniques that organizations can adopt to effectively manage, deploy, and scale applications within a Kubernetes environment strategies

Microservices Architecture:

-

Decompose Applications: Break down monolithic applications into smaller, independently deployable microservices.

-

Containerization: Containerize microservices to ensure consistency and portability across different environments.

Infrastructure as Code (IaC):

-

Declarative Configuration: Use tools like Kubernetes YAML files to declare the desired state of your infrastructure.

-

Version Control: Store configuration files in version control systems to track changes and ensure reproducibility.

Continuous Integration and Deployment (CI/CD):

-

Automated Pipelines: Implement CI/CD pipelines for automated building, testing, and deployment of applications to Kubernetes clusters.

-

Rolling Updates: Enable continuous delivery with rolling updates to ensure minimal downtime during application updates.

Scalability and Auto-scaling:

-

Horizontal Pod Autoscaling (HPA): Automatically scale the number of pods based on defined metrics like CPU or memory usage.

-

Cluster Autoscaler: Automatically adjust the size of the cluster to accommodate changes in demand.

High Availability (HA):

-

Multi-Node Clusters: Deploy Kubernetes clusters across multiple nodes and availability zones to ensure high availability.

-

Pod Anti-Affinity: Distribute critical pods across different nodes to enhance fault tolerance.

Service Mesh:

-

Istio, Linkerd: Implement a service mesh for advanced traffic management, security, and observability.

-

Traffic Splitting: Gradually roll out new versions of services using traffic splitting for canary releases.

Observability and Monitoring:

-

Prometheus, Grafana: Set up monitoring tools for collecting and visualizing metrics.

-

Centralized Logging: Implement centralized logging for efficient troubleshooting and debugging.

Security Best Practices:

-

Role-Based Access Control (RBAC): Enforce least privilege access using RBAC to control user permissions.

-

Pod Security Policies: Implement policies to define security constraints on pods.

Immutable Infrastructure:

-

Avoid Configuration Changes: Treat infrastructure as immutable, and avoid making changes to running instances.

-

Rollback Strategies: Easily roll back to a previous known-good state in case of issues.

Cost Optimization:

-

Resource Quotas: Set resource quotas to prevent resource hogging and control costs.

-

Right-sizing: Continuously monitor resource usage and adjust cluster capacity based on actual needs.

Disaster Recovery:

-

Backups: Regularly back up critical data and configurations to recover from data loss or cluster failures.

-

Multi-Region Deployments: Consider deploying clusters in multiple regions for added resilience.

Service Discovery and Load Balancing: Kubernetes provides built-in mechanisms for service discovery and load balancing, ensuring efficient communication between microservices.

Load Balancer in kubernetes

In Kubernetes, a Load Balancer is a crucial component for distributing network traffic across multiple pods or nodes to ensure high availability and optimal resource utilization. Kubernetes provides an abstraction for load balancing through the Service resource.

Service Resource:

-

Type LoadBalancer: When you create a Kubernetes Service and specify its type as LoadBalancer, Kubernetes interacts with the underlying cloud provider (e.g., AWS, Azure, GCP) to provision a load balancer.

Load Balancer Provisioning:

-

External IP: The cloud provider assigns an external IP address to the LoadBalancer Service, which is used to route external traffic.

-

Port Configuration: You can configure the load balancer to forward traffic on specific ports to the corresponding ports on the pods.

NodePort and ClusterIP:

-

NodePort: Alternatively, you can expose services using NodePort, which opens a specific port on each node and forwards traffic to the service.

-

ClusterIP: Services can also be exposed using ClusterIP, which provides an internal IP that is accessible only within the cluster.

Routing Traffic:

-

Service Discovery: Kubernetes uses DNS to provide a hostname for the LoadBalancer, allowing clients to discover the IP addresses of the pods.

-

Round Robin or Session Affinity: The Load Balancer can distribute traffic in a round-robin fashion or use session affinity to route requests from the same client to the same pod.

Health Checks:

-

Readiness and Liveness Probes: Define probes to check the readiness and liveness of individual pods. The Load Balancer can avoid routing traffic to unhealthy pods.

Annotations and Configuration:

-

Annotations: Cloud-specific annotations can be used to configure advanced settings on the load balancer, such as SSL termination, connection draining, or custom timeouts.

External DNS:

-

Integration with External DNS Services: Integrate the LoadBalancer Service with external DNS services to map a custom domain to the dynamically assigned external IP.

Ingress Controllers:

-

Ingress Resources: For more advanced routing and configuration, Ingress resources can be used with Ingress controllers. Ingress provides a way to expose HTTP and HTTPS routes to services.

-

Ingress Controllers: Third-party or cloud-specific Ingress controllers manage the external load balancer and handle routing based on Ingress rules.

Securing Traffic:

-

TLS Termination: Configure TLS termination at the LoadBalancer level for secure communication.

-

Network Policies: Implement network policies to control and secure communication between pods.

Scaling and Availability:

-

Scaling: As the number of pods or nodes changes, the Load Balancer adapts to the new configuration.

-

High Availability: Load Balancers themselves are designed to be highly available, providing redundancy and failover mechanisms.

Cost Considerations:

-

Cost Management: Depending on the cloud provider, there may be costs associated with LoadBalancer resources. Be mindful of cost considerations, especially in large deployments.

Monitoring and Logging: Learn about Kubernetes-native tools like Prometheus and Grafana for monitoring cluster health, resource usage, and application performance.

Monitoring Tools :

1. Prometheus and Grafana:

Prometheus

- You can describe Prometheus and its role in collecting metrics Prometheus

Grafana

- Explain how Grafana complements Prometheus by providing visualization Grafana

2. Centralized Logging (ELK Stack):

Elasticsearch

- Describe Elasticsearch as a distributed search and analytics engine Elasticsearch

Logstash

- Explain Logstash as a server-side data processing pipeline Logstash

Kibana

- Describe Kibana for data visualization and exploration Kibana

3. Container Network Interface (CNI):

Calico

- Explain how Calico provides networking and network security for containers Calico

4. Service Mesh (Istio):

Istio

- Describe Istio as a service mesh that provides features like traffic management and security Istio

5. Container Orchestration (Kubernetes):

Kubernetes

- Include the Kubernetes logo to visually represent the Kubernetes component Kubernetes

Kubernetes has revolutionized the way modern applications are developed, offering a scalable, resilient, and portable platform for managing containerized workloads. Embracing Kubernetes empowers teams to build and deploy applications efficiently, leading to increased agility and innovation in software development. Follow RazorOps Linkedin Page Razorops, Inc.