Diving into Container Registries: An In-Depth Overview

Table of contents:

- What are containers?
- What is the difference between a container and a virtual machine?
- What is a Docker container?
- Explain Docker container architecture
- What is a Docker client?
- What is a Dockerfile and how do you create one?
- What is a Docker host?
- What is the Docker daemon?
- What are Docker images or container images?
- How to create Docker images?
- What is a container registry?
- Where to store Docker images?
- How to write an optimized Dockerfile?
- Why do we need a container registry?
- What are the advantages and disadvantages of Docker registries?
- Conclusion

Physical servers are often underutilized: much of their CPU, memory, and storage sits idle, and the same is true of laptops. Containers were introduced to address this challenge by letting many isolated workloads share a single machine efficiently.

What are containers?

Containers run on top of a host operating system and share its kernel. The host provides the essential resources and services that containers need to run, such as process isolation, file systems, and networking. Containers do not include a full operating system, which is why they are lightweight: they rely on the host kernel and package only the application and its user-space dependencies. They are often preferred for microservices architectures, rapid scaling, and deployment consistency.

Containers provide process isolation, allowing applications to run independently of each other on the same host. This isolation is achieved through features like namespaces, which create a separate view of the system resources for each container.

Containers encapsulate an application and its dependencies, including libraries and runtime. This ensures portability across different environments, making it easy to run the same containerized application on a developer’s laptop, a testing server, or in a production environment.

In short, a container packages the application code, runtime, library dependencies, and system dependencies that are required to run the application.

Here are some notable container technologies: Docker, Podman, rkt (Rocket), containerd, CRI-O, LXC (Linux Containers).

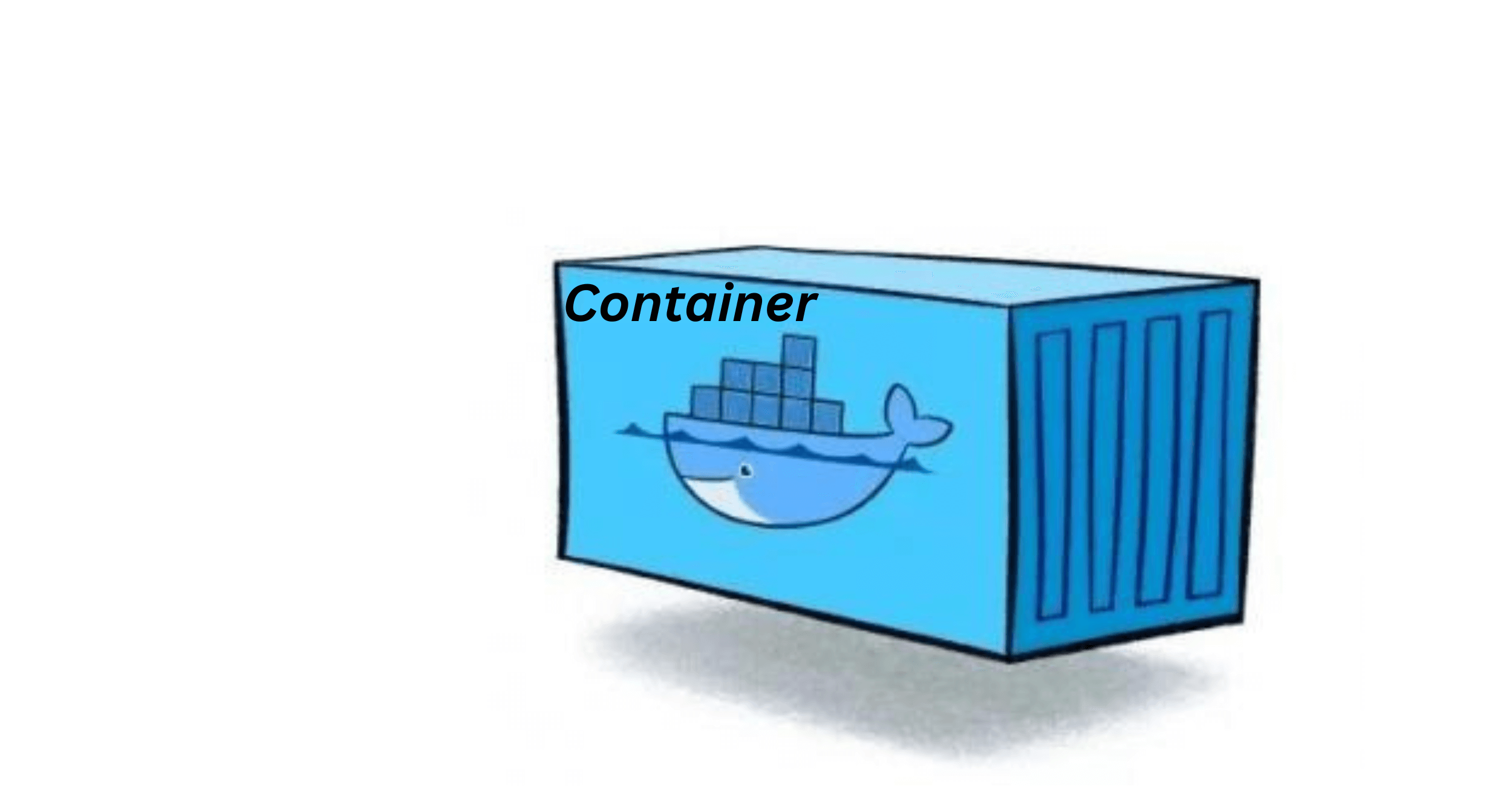

What is the difference between a container and a virtual machine?

| Aspect | Containers | Virtual Machines (VMs) |
|---|---|---|
| Isolation Level | Process-level isolation; containers share the host OS kernel | Hardware-level virtualization; each VM runs its own guest OS |
| Resource Overhead | Lightweight, minimal overhead | Heavier, as each VM includes a complete OS stack |
| Performance | Generally higher performance due to the shared kernel | May have slightly lower performance due to virtualization overhead |
| Start Time | Quick startup time | Longer startup time, because the guest OS must boot |
| Resource Utilization | Higher density, more containers on a host | Lower density, each VM consumes more resources |
| Portability | Highly portable; can run consistently across environments | Less portable; VMs may need conversion between hypervisors |
| Scaling | Scales easily by spawning additional containers | Scaling involves provisioning new VM instances |
| Snapshot & Backup | Snapshots are lightweight and quick | Snapshots are larger and may take more time |
| Security | The shared kernel poses potential security risks | Stronger isolation, often considered more secure |
| Use Cases | Microservices, lightweight applications | Legacy applications, diverse operating systems |
| Orchestration Tools | Kubernetes, Docker Swarm, etc. | VM management platforms such as VMware and Hyper-V |

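A hypervisor is software installed on a physical server (or on its host operating system) that creates and runs virtual machines. By logically isolating them from one another, a single server can host many virtual machines, which makes more efficient use of the hardware. Examples of hypervisors are VMware and Xen.

Every virtual machine has its own virtual CPU, memory, and hardware, along with a full guest operating system. Containers can be seen as a lighter-weight evolution of this idea: instead of virtualizing hardware, they isolate processes on a shared kernel.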

What is Docker Container?

A Docker container is a lightweight, standalone, and executable package that includes everything needed to run a piece of software, including the code, runtime, libraries, and system tools. Docker containers provide a consistent and reproducible environment, making it easy to develop, deploy, and scale applications across various environments.

Docker containers have become ubiquitous in the software industry, and Docker, both as a project and a company, played a significant role in the widespread adoption of containers. Docker became the most popular containerization platform because its portable artifacts could be easily shared and moved between development and operations teams. By following Docker best practices, you can launch a sophisticated application locally, or on any machine, in under five minutes.

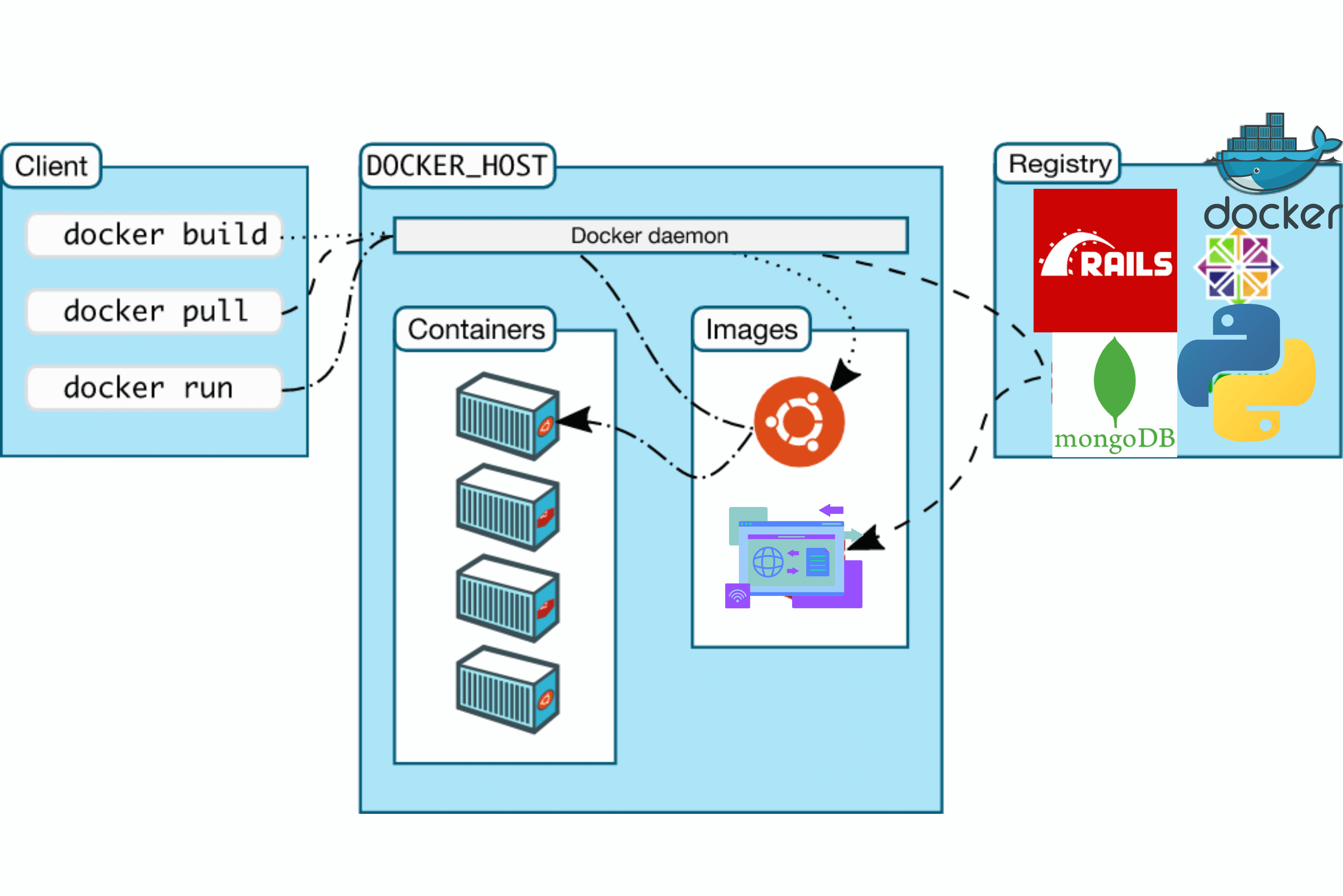

Explain Docker container architecture?

Understanding Docker's architecture is essential for working with it effectively. Docker has three main components: the Docker client, the Docker host, and the Docker registry.

What is a Docker client?

The Docker client is the command-line tool and the primary interface through which users interact with Docker. It accepts commands from the user and sends them to the Docker daemon, which then executes the requested actions. The client can run on the same host as the daemon or connect to a remote Docker daemon.

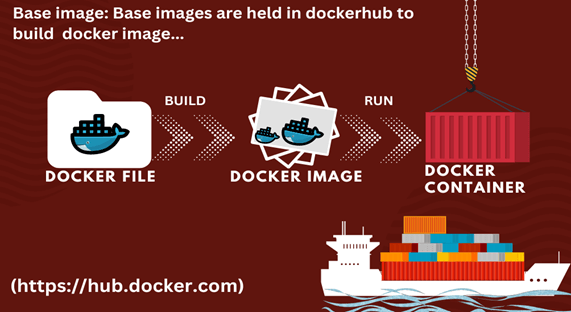

What is a Dockerfile and how do you create one?

Dockerfile: A Dockerfile is a text file containing the instructions used to build a Docker image. It acts as a blueprint for packaging an application.

A Dockerfile typically contains:

- FROM: Sets the base image. Every Dockerfile starts from a base image.
- ENV: Sets environment variables, which let you configure various aspects of the container environment.
- RUN: Executes a command while the image is being built, for example to install a package or create a directory inside the image.
- COPY: Copies files from the build context on the host into the image. Unlike RUN, it does not execute anything.
- CMD: Sets the default command that runs when a container starts. If an ENTRYPOINT is defined, CMD supplies its default arguments.

| Instruction | Overview |
|---|---|
| FROM | Specify the base image |
| RUN | Execute the specified command at build time |
| ENTRYPOINT | Specify the executable that runs when the container starts |
| CMD | Specify the default command, or the default arguments to ENTRYPOINT, at container start |
| ADD | Copy files/directories into the image; also accepts URLs and auto-extracts local tar archives |
| ENV | Set environment variables |
| EXPOSE | Document the port the container listens on (does not publish it) |
| WORKDIR | Set the working directory for subsequent instructions |
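To make the "instruction followed by arguments" structure of a Dockerfile concrete, here is a minimal Python sketch that splits Dockerfile text into (instruction, arguments) pairs. It is an illustration only, not how Docker itself parses files: the function name `parse_dockerfile` and the sample Dockerfile are assumptions made for this example, and the sketch ignores heredocs, parser directives, and custom escape characters.

```python
def parse_dockerfile(text):
    """Return a list of (INSTRUCTION, arguments) pairs from Dockerfile text."""
    instructions = []
    pending = ""  # accumulates a logical line split across backslash continuations
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        # A trailing backslash means the instruction continues on the next line.
        if line.endswith("\\"):
            pending += line[:-1].strip() + " "
            continue
        logical = (pending + line).strip()
        pending = ""
        keyword, _, args = logical.partition(" ")
        instructions.append((keyword.upper(), args.strip()))
    return instructions


# Hypothetical Dockerfile used only for this illustration.
example = """\
# Sample application image
FROM alpine:latest
WORKDIR /app
RUN apk --no-cache add \\
    python3
COPY . .
CMD ["python3", "app.py"]
"""

for keyword, args in parse_dockerfile(example):
    print(f"{keyword:8} {args}")
```

Each printed row corresponds to one build step, which is also roughly how the builder walks through the file from top to bottom.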

What is a Docker host?

The Docker host is the machine where Docker is installed and containers are executed. It runs the Docker daemon, which manages container operations.

What is the Docker daemon (dockerd)?

The Docker daemon is a background process that manages Docker containers on a host system. It listens for Docker API requests and handles the creation, running, and monitoring of containers. The Docker CLI (Command Line Interface) communicates with the daemon through its REST API to execute commands and manage containers.

What are Docker images or container images?

A Docker image is a lightweight, standalone, and executable package that includes everything needed to run an application, including the code, runtime, libraries, and system tools. Images are built from a set of instructions in a Dockerfile and are the basis for running Docker containers.

How to create Docker images?

Creating Docker images involves defining a Dockerfile, a script-like set of instructions that specify how to build the image.

Here are the general steps to create a Docker image:

Step 1: Create a Dockerfile

1. Choose a Base Image:

Select a base image that provides the foundational operating system and environment for your application. For example, to use a minimal Alpine Linux image, start your Dockerfile with:

FROM alpine:latest

2. Define the Working Directory

Set the working directory inside the container. This is where your application code and files will be copied.

WORKDIR /app

3. Copy Application Files:

Copy your application code into the container.

COPY . .

4. Install Dependencies:

If your application requires dependencies, install them using the appropriate package manager.

RUN apk --no-cache add python3

5. Specify Application Commands:

Define the commands that will run your application. This can include start-up commands, entry points, or default commands.

CMD ["python3", "app.py"]

Step 2: Build the Docker Image

1. Navigate to the Directory with Dockerfile:

Open a terminal and navigate to the directory containing your Dockerfile.

2. Build the Docker Image:

Use the docker build command to build the Docker image. Provide a -t flag to tag the image with a name and version.

docker build -t myapp:latest .

Replace myapp with your desired image name, and latest with the version tag.

3. View the Built Image:

After a successful build, you can view the list of Docker images on your system.

docker images

Step 3: Run the Docker Image

1. Run the Docker Image:

Use the docker run command to create and start a container based on the image you just built.

docker run -p 8080:80 myapp:latest

This example maps port 8080 on your host machine to port 80 inside the container.

2. Access the Application:

Open a web browser or use a tool like curl to access your application running inside the container.

curl http://localhost:8080

Step 4: Push to a Docker Registry

If you want to share your Docker image with others or deploy it to a remote environment, you can push it to a Docker registry like Docker Hub. Log in, tag the local image with your registry namespace, and then push it:

docker login

docker tag myapp:latest myusername/myapp:latest

docker push myusername/myapp:latest

Replace myusername with your Docker Hub username and adjust the image name and version accordingly.
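Names such as myapp:latest and myusername/myapp:latest follow a common pattern: an optional registry host, a repository path, and a tag or digest. The Python sketch below shows one way to split a reference into those parts, following the normalization conventions Docker applies (Docker Hub as the default registry, the `library/` namespace for single-name images, and `latest` as the default tag). The function name `parse_image_reference` and the `localhost:5000` registry are assumptions for illustration, and the sketch does not validate characters the way a real client would.

```python
def parse_image_reference(ref):
    """Split an image reference into registry, repository, tag, and digest."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)

    # A colon after the last slash separates the tag; a colon before it
    # belongs to a registry port such as localhost:5000.
    tag = None
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        ref, tag = ref[:last_colon], ref[last_colon + 1:]

    first, slash, rest = ref.partition("/")
    # The first component counts as a registry host only if it looks like one
    # (contains a dot or a port, or is "localhost").
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", ref
        # Official images on Docker Hub live under the "library/" namespace.
        if "/" not in repository:
            repository = "library/" + repository

    if tag is None and digest is None:
        tag = "latest"
    return {"registry": registry, "repository": repository,
            "tag": tag, "digest": digest}


for name in ("myapp:latest",
             "myusername/myapp:latest",
             "localhost:5000/team/myapp:1.0"):
    print(name, "->", parse_image_reference(name))
```

This also shows why the tagging step matters before a push: the repository part of the name determines where the image is sent.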

By following these steps, you can create a Docker image for your application, making it easy to share, distribute, and deploy consistently across different environments.

What is the command to build a Docker image?

To build a Docker image from the Dockerfile in the current directory:

docker build -t <image-name>:<tag> .

To list the images on your system:

docker images

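What is a Container Registry?

A container registry is a centralized service for storing, managing, and distributing container images. You push images to a registry and pull them onto any host that needs to run them.

Docker container: A Docker container is a running instance of a Docker image. It encapsulates an application so that it behaves the same way across various environments.

Command to create a Docker container from an image:

docker run <options> <image>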

Where to store Docker Images?

Docker images can be stored in various places, depending on your use case, security requirements, and operational needs. Here are several options:

| Registry | Description | Link |
|---|---|---|
| Docker Hub | The default public registry provided by Docker. It allows you to store, share, and distribute Docker images. You can create both public and private repositories on Docker Hub. | https://hub.docker.com/ |
| Amazon Elastic Container Registry (ECR) | A fully managed container registry provided by AWS. It integrates seamlessly with other AWS services and allows you to store, manage, and deploy Docker images on AWS infrastructure. | https://aws.amazon.com/ecr/ |
| Google Container Registry (GCR) | A private container registry provided by Google Cloud. It is integrated with Google Cloud Platform services and allows you to store Docker images securely. | https://cloud.google.com/ |
| Azure Container Registry (ACR) | A private container registry provided by Microsoft Azure. It enables you to store and manage Docker images for use with Azure services. | https://azure.microsoft.com/en-in/products/container-registry |
| JFrog Artifactory | A universal binary repository manager that supports Docker images. It provides fine-grained access control and integrates with CI/CD tools. | https://jfrog.com/ |
| IBM Cloud Container Registry | A registry service on the IBM Cloud. It provides a secure and scalable platform for storing and managing Docker images. | https://www.ibm.com/cloud/ |
| GitLab Container Registry | Integrated into GitLab, it allows you to store Docker images within your GitLab instance. It provides versioning, access control, and continuous integration features. | https://about.gitlab.com/ |
| Harbor | An open-source registry you can install almost anywhere, but particularly suited to Kubernetes. It is compatible with most cloud services and Continuous Integration and Continuous Delivery (CI/CD) platforms, and it is a good on-premises solution too. | https://goharbor.io/ |
| Red Hat Quay | Offers private container registries only, which makes it a suitable option for enterprise-level customers in particular. Cloud provider agnostic, Quay is easy to connect to systems at either end of your DevOps pipeline. | https://www.openshift.com/products/quay |
| Alibaba Cloud Container Registry (ACR) | A fully managed container registry service provided by Alibaba Cloud. It offers a secure and scalable solution for storing, managing, and deploying Docker container images. | https://www.alibabacloud.com/product/container-registry |
| DigitalOcean Container Registry (DOCR) | A private Docker image registry with additional tooling support that enables integration with your Docker environment and DigitalOcean Kubernetes clusters. | https://www.digitalocean.com/ |
| Portus | An open-source authorization service and user interface for the Docker registry. It extends the registry with features like user management, access control, and enhanced visibility into the stored images. | https://github.com/SUSE/Portus |

How to write an optimized Dockerfile?

An optimized Dockerfile builds efficiently and produces an image that is lightweight, secure, and in line with best practices. Here are some tips for writing one:

Step 1: Use a Minimal Base Image

Start with a minimal base image. Choose an image that contains only the essential components required for your application. Alpine Linux-based images are often a good choice due to their small size. For reproducible builds, consider replacing latest with a specific version tag.

FROM alpine:latest

Step 2: Group and Order Instructions

Group related instructions together and order them to take advantage of Docker's build cache. Put instructions that change rarely, such as installing dependencies, before instructions that change often, such as copying application code, so that the earlier layers can be reused from the cache.

# Install dependencies

RUN apk --no-cache add \
    build-base \
    python3

# Copy application code

COPY . /app

Step 3: Minimize Layers

Limit the number of layers in your Docker image. Each RUN, COPY, and ADD instruction creates a new layer, so combining related commands into a single RUN instruction reduces the number of layers. In the example, package1, package2, and the cleanup commands are placeholders.

RUN apk --no-cache add \
    package1 \
    package2 \
    && cleanup_commands \
    && more_cleanup_commands

Step 4: Clean Up Unnecessary Files

Remove unnecessary files and artifacts after installing dependencies or building the application to reduce the image size. For the reduction to take effect, append the cleanup to the same RUN instruction that installed the packages; files deleted in a later instruction still occupy space in the earlier layer.

# Remove unnecessary packages and clean up

RUN apk del build-base && \
    rm -rf /var/cache/apk/*

Step 5: Use .dockerignore

Create a .dockerignore file to exclude unnecessary files and directories from the build context. A smaller context speeds up the build and keeps those files out of the image.

node_modules

.git

.dockerignore

Step 6: Specify the Application Port

State the port your application listens on with the EXPOSE instruction. EXPOSE documents the port but does not publish it; you still map it with -p when running the container.

EXPOSE 3000

Step 7: Run as a Non-Root User

Run the application as a non-root user to enhance security. Create a non-root user and use the USER instruction to switch to that user.

# Create a non-root user

RUN adduser -D myuser

# Set the user for subsequent instructions

USER myuser

Step 8: Use Environment Variables

Use build arguments and environment variables to parameterize your Dockerfile. ARG sets a value at build time, and ENV makes it available inside the running container, where it can still be overridden at runtime.

ARG NODE_ENV=production

ENV NODE_ENV=$NODE_ENV

Step 9: Enable BuildKit

Use BuildKit, Docker's next-generation builder. On Docker versions where it is not already the default, enable it by setting the DOCKER_BUILDKIT=1 environment variable. BuildKit provides improved performance, parallelism, and additional features.

# syntax=docker/dockerfile:1

FROM node:14 AS builder

...

Remember that optimization considerations may vary based on the specific requirements of your application and deployment environment. Regularly review and update your Dockerfiles as your application evolves to ensure ongoing optimization.
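The advice on ordering instructions for the build cache can be illustrated with a small simulation. The Python sketch below is a simplified model, not Docker's actual cache implementation: it assumes each step's cache key depends on the previous step's key, the instruction text, and (for COPY) a stand-in for the content of the copied files. The helper names `layer_keys`, `reused_layers`, and `build` are hypothetical.

```python
import hashlib


def layer_keys(steps):
    """Return one cache key per build step.

    Each step is (instruction_text, input_content). A key depends on the
    previous key, so a change invalidates that step and every later one.
    """
    keys = []
    parent = ""
    for instruction, content in steps:
        data = f"{parent}|{instruction}|{content}".encode()
        parent = hashlib.sha256(data).hexdigest()
        keys.append(parent)
    return keys


def reused_layers(old_steps, new_steps):
    """Count leading steps whose cache keys are unchanged between two builds."""
    count = 0
    for old, new in zip(layer_keys(old_steps), layer_keys(new_steps)):
        if old != new:
            break
        count += 1
    return count


def build(order, source_version):
    """Assemble build steps in the given order for one version of the source."""
    steps = {
        "from": ("FROM alpine:latest", ""),
        "deps": ("RUN apk --no-cache add build-base python3", ""),
        "copy": ("COPY . /app", source_version),
    }
    return [steps[name] for name in order]


deps_first = ["from", "deps", "copy"]
copy_first = ["from", "copy", "deps"]

# Only the application source changes between the two builds ("v1" -> "v2").
print(reused_layers(build(deps_first, "v1"), build(deps_first, "v2")))  # 2
print(reused_layers(build(copy_first, "v1"), build(copy_first, "v2")))  # 1
```

With dependencies installed before the source is copied, a code change leaves the dependency layer cached; with the order reversed, the dependency installation has to run again on every change.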

Why do we need a container registry?

A container registry plays a crucial role in any successful container management strategy.

Let me explain why:

1. Centralized Storage and Management:

- A container registry serves as a catalog of storage locations where you can push and pull container images.
- These images are stored in repositories within the registry.
- Each repository contains related images with the same name, representing different versions of a container deployment.
- For instance, on Docker Hub, the repository named “nginx” holds various versions of the Docker image for the open-source web server NGINX.
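The relationship between a registry, its repositories, and the tagged versions inside each repository can be sketched as a small in-memory model. This Python example is a toy stand-in, not the real registry API: the class `ToyRegistry`, its methods, and the byte strings standing in for image contents are all assumptions made for illustration.

```python
import hashlib


class ToyRegistry:
    """In-memory stand-in for a registry: repository -> tag -> image digest."""

    def __init__(self):
        self.repositories = {}

    def push(self, repository, tag, image_bytes):
        """Store a tag pointing at the digest of the pushed content."""
        digest = "sha256:" + hashlib.sha256(image_bytes).hexdigest()
        self.repositories.setdefault(repository, {})[tag] = digest
        return digest

    def pull(self, repository, tag="latest"):
        """Resolve a repository and tag to the digest it currently points at."""
        try:
            return self.repositories[repository][tag]
        except KeyError:
            raise LookupError(f"{repository}:{tag} not found") from None

    def tags(self, repository):
        return sorted(self.repositories.get(repository, {}))


registry = ToyRegistry()
registry.push("nginx", "1.24", b"contents of an older build")
registry.push("nginx", "1.25", b"contents of a newer build")
registry.push("nginx", "latest", b"contents of a newer build")

print(registry.tags("nginx"))
print(registry.pull("nginx", "1.25") == registry.pull("nginx"))
```

The point of the model is that a tag is only a movable name: here 1.25 and latest resolve to the same digest because they were pushed with identical content.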

2. Types of Container Registries:

- Docker Hub: Docker’s official image resource provides access to over 100,000 off-the-shelf images contributed by software vendors, open-source projects, and the Docker community.
- Self-Hosted Registries: Organizations that prioritize security, compliance, or low latency often prefer hosting their container images on their own infrastructure. Self-hosted registries allow complete control over image management.
- Third-Party Registry Services: These managed offerings provide control over image management without the operational burden of running an on-premises registry.

3. Key Benefits:

- Efficient Access: Container registries offer reliable, consistent, and efficient access to your container images.
- Integration with CI/CD Workflows: They seamlessly integrate into your Continuous Integration (CI) and Continuous Delivery (CD) workflows.
- Built-In Features: Registries provide features like image security, integrity checks, and version control.
- Space Optimization: They share common layers across images, optimizing storage space.
- Image Cleanup: Regular housekeeping ensures you manage only necessary images.
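The space optimization point above, sharing common layers across images, rests on content addressing: a layer is stored under the hash of its bytes, so identical layers are kept only once. The following Python sketch models that idea under simplifying assumptions; the class `LayerStore`, the image names, and the byte strings standing in for layers are hypothetical.

```python
import hashlib


class LayerStore:
    """Content-addressed blob store: identical layers are kept only once."""

    def __init__(self):
        self.blobs = {}      # digest -> layer bytes
        self.manifests = {}  # image name -> ordered list of layer digests

    def push_image(self, name, layers):
        digests = []
        for layer in layers:
            digest = hashlib.sha256(layer).hexdigest()
            self.blobs.setdefault(digest, layer)  # no-op if already stored
            digests.append(digest)
        self.manifests[name] = digests

    def stored_bytes(self):
        return sum(len(blob) for blob in self.blobs.values())


base = b"B" * 1000     # stand-in for a shared base layer
app_one = b"1" * 200   # stand-in for the first application's layer
app_two = b"2" * 300   # stand-in for the second application's layer

store = LayerStore()
store.push_image("service-one:latest", [base, app_one])
store.push_image("service-two:latest", [base, app_two])

print(len(store.blobs))      # 3 unique layers, not 4
print(store.stored_bytes())  # 1500 bytes instead of 2500
```

The same mechanism helps on the pulling side: a host that already holds the base layer only needs to download the application layer.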

What are the advantages and disadvantages of Docker registries?

Docker registries play a crucial role in the container ecosystem, offering a centralized location for storing, managing, and distributing Docker images. However, like any technology, Docker registries have both advantages and disadvantages.

Advantages of Docker Registries:

1. Centralized Image Storage:

Registries provide a centralized repository for storing Docker images, ensuring a single source of truth for your organization’s containerized applications.

2. Efficient Image Distribution:

Registries support efficient distribution of images, enabling quick and consistent deployment across multiple environments. Layers that a host has already pulled are cached locally, so subsequent deployments download only the layers that are missing.

3. Access Control:

Docker registries offer access control mechanisms, allowing organizations to control who can push, pull, or modify images. This is crucial for enforcing security policies and restricting access to sensitive images.

4. Security Features:

Many registries provide built-in security features such as vulnerability scanning, content trust, and image signing. These features help identify and address security issues before deployment.

5. Versioning and Tagging:

Registries support versioning and tagging of images, allowing teams to manage and track changes over time. This helps in maintaining a history of image versions.

6. Scalability:

Docker registries are designed to scale, supporting the storage and distribution of a large number of images. This is crucial for organizations with growing containerized applications.

7. Integration with CI/CD:

Registries seamlessly integrate with CI/CD pipelines, enabling automated building, testing, and deployment of containerized applications. This facilitates a smooth and automated development workflow.

8. Content Trust and Image Signing:

Registries support content trust mechanisms and image signing, ensuring the integrity and authenticity of images. This helps prevent the use of tampered or malicious images.

9. Metadata Management:

Docker registries store metadata associated with images, including tags, labels, and descriptions. This metadata provides additional information about the content and purpose of each image.

Disadvantages of Docker Registries:

1. Dependency on External Service:

Organizations using external registries, such as Docker Hub, may face disruptions if there are issues with the external service. This dependency can impact development and deployment workflows.

2. Storage Costs:

Depending on the size and number of images, storage costs for Docker registries can become a factor, especially when dealing with large-scale deployments.

3. Latency in Image Pulling:

Pulling images from external registries may introduce latency, affecting the speed of application deployment, especially in scenarios with limited bandwidth.

4. Potential for Image Bloat:

If not managed properly, registries may accumulate unused or outdated images, leading to image bloat. Regular maintenance is required to clean up unnecessary images.

5. Limited Offline Access:

In certain environments, especially those with limited internet access, relying on external registries may pose challenges. Private or self-hosted registries can address this limitation.

6. Complexity in Setup and Maintenance:

Setting up and maintaining a private registry, especially with additional features like authentication and security measures, can add complexity to the infrastructure.

7. Potential Security Risks:

While Docker registries enhance security, misconfigurations or lax access control can introduce security risks. Regular auditing and adherence to best practices are necessary to mitigate these risks.
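The image bloat concern listed among the disadvantages is usually handled with a retention policy. The Python sketch below shows only the selection logic for such a policy, assuming one repository, a list of (tag, push time) pairs, and a rule of "keep the newest few plus protected tags". The function name `select_for_deletion`, its parameters, and the sample data are hypothetical; managed registries expose their own lifecycle or cleanup settings, which this does not model.

```python
from datetime import datetime, timedelta


def select_for_deletion(images, keep_latest=3, protected_tags=("latest",)):
    """Return tags to delete, keeping the newest `keep_latest` plus protected tags.

    `images` is a list of (tag, pushed_at) pairs for one repository.
    """
    newest_first = sorted(images, key=lambda item: item[1], reverse=True)
    keep = {tag for tag, _ in newest_first[:keep_latest]}
    keep.update(protected_tags)
    return [tag for tag, _ in newest_first if tag not in keep]


# Sample data: six builds pushed on consecutive days, plus a "latest" tag.
now = datetime(2024, 1, 31)
images = [(f"build-{n}", now - timedelta(days=n)) for n in range(1, 7)]
images.append(("latest", now))

print(select_for_deletion(images))
```

Running a rule like this on a schedule, and reviewing its output before deleting anything, keeps storage costs and clutter in check without removing tags that deployments still depend on.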

Conclusion:

Docker registries provide essential benefits for managing containerized applications, but organizations should carefully consider their specific needs, security requirements, and operational constraints when choosing and utilizing Docker registries. Proper planning and adherence to best practices are crucial to maximizing the advantages while addressing potential disadvantages.